Blockchain and InterPlanetary File System (IPFS)

This article discusses the InterPlanetary File System (IPFS) and blockchain technologies, which together could enable a web that is distributed, immutable, and secure. It is intended for readers who would like to learn about IPFS and its integration with Blockchain.

The current web doesn’t impose any security measures by itself. It is up to each provider to look after the consumer’s security, which reflects the centralized nature of the web. The web also provides no guarantee that content accessed today will still be available tomorrow. In other words, the web is mutable.

HTTP is also inefficient and expensive. A file is served from a single location, so HTTP fetches it from one computer at a time instead of getting pieces from multiple computers simultaneously.

Possible Solution

At its core, the web is just a very large data store. If this store were decentralized, immutable, and provided a way to verify the authenticity or source of data, it could serve as the basis for a verifiable, immutable web. IPFS and Blockchain technologies have these properties, so using them together to build a data storage system is one possible solution.

InterPlanetary File System (IPFS)

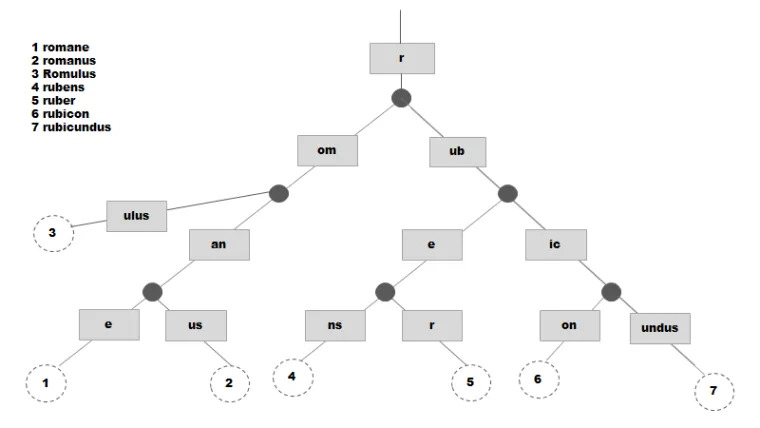

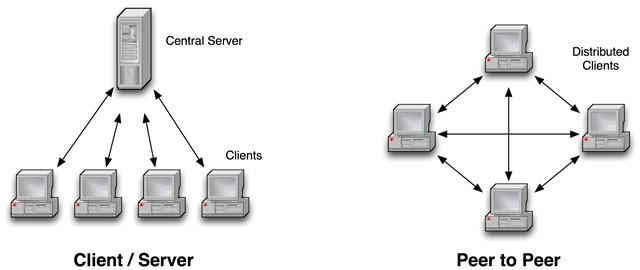

The InterPlanetary File System (IPFS) is a peer-to-peer (P2P) distributed file system that seeks to connect all devices with the same system of files. IPFS is a content-addressed block storage format with content-addressed hyperlinks that allows for high throughput. This forms a data structure upon which one can build versioned file systems, blockchains, and even a permanent web. IPFS combines a distributed hash table, block exchange, and a self-certifying namespace.

Instead of using a location address, IPFS addresses content by a representation of the content itself: its hash. The hash represents a root object. Instead of getting a response from a server, you gain access to the root object’s data. With HTTP you are asking what is at a certain location, whereas with IPFS you are asking where a certain file is.
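To make content addressing concrete, here is a minimal sketch of a store whose keys are derived from the stored bytes. It is an illustration only: the `ContentStore` class and its methods are hypothetical names, and a plain SHA-256 hex digest stands in for the richer identifiers and chunking that a real IPFS node uses.

```python
import hashlib


class ContentStore:
    """Toy content-addressed store: the address is the hash of the bytes."""

    def __init__(self):
        self._blocks = {}

    def put(self, data: bytes) -> str:
        # The address depends only on the content, not on where it is stored.
        address = hashlib.sha256(data).hexdigest()
        self._blocks[address] = data
        return address

    def get(self, address: str) -> bytes:
        data = self._blocks[address]
        # Any reader can re-hash the bytes to check they match the address.
        if hashlib.sha256(data).hexdigest() != address:
            raise ValueError("content does not match its address")
        return data


store = ContentStore()
addr = store.put(b"hello, distributed web")
same_addr = store.put(b"hello, distributed web")    # identical content
other_addr = store.put(b"hello, distributed web!")  # one byte more
```

Identical content always maps to the same address, and any change to the content produces a different address, which is why such links can be treated as immutable.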

Note: The section that follows does not explain Blockchain in detail, because it is already covered here and here. Instead, it discusses Blockchain in terms of making the web distributed, immutable, and secure.

Blockchain

The blockchain doesn’t need centralized data storage for maintaining its data.

There are various blockchains with different goals, but these are the common elements:

Replicated ledger. The history of all transactions among nodes in a blockchain is securely stored.

Cryptography. Integrity and authenticity of all transactions shared among the blockchain nodes are supported with digital signatures and specialized data structures. Privacy of transactions is also supported with anonymous addresses for transactions.

Consensus. Transactions that are exchanged among the blockchain nodes need to be validated before they are added to the existing blocks. The validation requires agreement among the blockchain nodes, according to the rules of the consensus protocol.

P2P networking. All transactions are shared without centralized control over the web, i.e. the blockchain nodes are connected through a P2P network over the web, and not through the client-server model.
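The following minimal sketch illustrates the "specialized data structures" behind the replicated ledger: each block stores the hash of the previous block, so changing an old transaction breaks every later link. The function names and block layout are assumptions made for this illustration; signatures, consensus, and networking are left out.

```python
import hashlib
import json


def block_hash(block: dict) -> str:
    # Serialize deterministically so equal blocks always hash the same.
    encoded = json.dumps(block, sort_keys=True).encode()
    return hashlib.sha256(encoded).hexdigest()


def append_block(chain: list, transactions: list) -> None:
    prev = block_hash(chain[-1]) if chain else "0" * 64
    chain.append({"index": len(chain), "prev_hash": prev, "transactions": transactions})


def is_valid(chain: list) -> bool:
    # Every block must reference the hash of the block before it.
    return all(
        chain[i]["prev_hash"] == block_hash(chain[i - 1])
        for i in range(1, len(chain))
    )


ledger = []
append_block(ledger, ["alice pays bob 5"])
append_block(ledger, ["bob pays carol 2"])
append_block(ledger, ["carol pays dave 1"])
valid_before = is_valid(ledger)

# Tampering with an old block invalidates the link stored in the next block.
ledger[1]["transactions"] = ["bob pays mallory 2"]
valid_after = is_valid(ledger)
```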

Blockchain also has some downsides. The blockchain ensures a strong degree of security for its chain, but the risk of managing private keys remains. Private keys are used in the blockchain to prove ownership of a certain item or data, but they can be lost or stolen. Another issue is scalability. As the blockchain is immutable, it must maintain a continuously growing list of blocks. As transactions need to be broadcast to blockchain nodes connected through a P2P network, the network can easily become congested.

Integration of IPFS with Blockchain

It is possible to address large amounts of data with IPFS and to place the immutable, permanent IPFS links into a blockchain transaction.

Blockchain Architecture

Blockchains store the complete transaction details in the chain. While this data is immutable and replicated across multiple nodes, its size becomes a serious source of problems. Storing entire data payloads increases the byte size of the chain and affects blockchain performance: it decreases replication speed and increases the computation cost.

Possible Solution for Blockchain Data Storing

Instead of storing the entire data on the chain, the data can be stored at a different location, and only an address pointer to that location and the hash of the data are stored in the chain.

These are the advantages of this approach:

- Byte size of the chain is kept to its minimum.

- The address pointer and data hash require fewer bytes than the data itself.

- A lightweight chain can be quickly replicated across the network, and consensus algorithms can easily work with it.

- Storing the hash of the data makes tampering detectable, because the data can be verified against the file hash.

This architecture can be divided into two parts. The first one deals with the storage of data in the IPFS network and indexing it in the blockchain. The second part deals with the retrieval of data from IPFS using the indexed hash from the blockchain.

Specification

A basic working implementation of this approach requires a blockchain and an IPFS daemon running on a network. The blockchain can be simple: a basic chain, a simple proof-of-work algorithm, and a consensus algorithm to resolve conflicts. The IPFS daemon is installed on the nodes and started so that they can interact with it.

Indexing and Storing Data

The steps for indexing and storing data are as follows:

The file is encrypted using a private key. Since files stored in IPFS are open, the author has to encrypt the file in order to restrict access to its content.

The resulting file is hashed. IPFS produces a SHA-256 hash of the file.

The file is stored in the network, and its hash is returned. This unique hash is used to identify and retrieve the file from the network.

The returned file hash is saved in a transaction in the blockchain. A block is created periodically, which preserves the contents of the chain.

Retrieving Indexed Hash and Data

The steps for retrieving indexed hash and data are as follows:

Get the file hash from the blockchain. To identify and retrieve a file from IPFS, the file hash is required. The file hash is taken from the blockchain.

Get the encrypted file from IPFS using the file hash. A GET request carrying the file hash is sent to the IPFS network, which returns the file. The name of the returned file is its hash.

Decrypt the file using the private key. If the file was encrypted before saving, it has to be decrypted before it is ready for use.
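The two halves of this architecture (storing and indexing, then retrieving) can be sketched as follows. This is a simplified model, not an implementation against a real IPFS daemon or blockchain: `ipfs_store` and `chain` are in-memory stand-ins, the function names are hypothetical, and the encryption and decryption steps are omitted, so the payload is assumed to be already encrypted.

```python
import hashlib

ipfs_store = {}  # stand-in for the IPFS network: file hash -> bytes
chain = []       # stand-in for the blockchain: a list of transactions


def store_file(encrypted: bytes, author: str) -> str:
    """Store the (already encrypted) file off-chain and index its hash on-chain."""
    file_hash = hashlib.sha256(encrypted).hexdigest()
    ipfs_store[file_hash] = encrypted
    chain.append({"author": author, "file_hash": file_hash})
    return file_hash


def retrieve_file(tx_index: int) -> bytes:
    """Look up the hash on-chain, fetch the file, and verify it before use."""
    file_hash = chain[tx_index]["file_hash"]
    data = ipfs_store[file_hash]
    if hashlib.sha256(data).hexdigest() != file_hash:
        raise ValueError("file does not match the hash recorded on chain")
    return data


payload = b"\x8f\x02 bytes that were encrypted by the author " * 1000
recorded = store_file(payload, author="alice")
fetched = retrieve_file(0)

on_chain_bytes = len(bytes.fromhex(recorded))  # size of the hash kept on-chain
off_chain_bytes = len(payload)                 # size of the data kept in IPFS
```

Only the fixed-size hash lives in the transaction, however large the file is, and the check in `retrieve_file` is what lets a reader detect a file that was swapped or altered off-chain.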

Conclusion

Proof-of-work consensus mechanisms limit transaction speed, which makes storing data or large files on the blockchain infeasible. IPFS is a P2P distributed file system that seeks to connect all devices with the same system of files.

The drawback of the blockchain is that it can’t hold large amounts of data. Large data slows the cloning and storing process and hence the entire network. This can be addressed by storing the data in IPFS and placing the immutable links that point to the data in the chain. The files are encrypted before storage and decrypted on retrieval by an authorized user.

In this article, we have seen the following:

- A possible solution for making the web decentralized, immutable, and secure.

- Basics of InterPlanetary File System (IPFS).

- Basics of blockchain in terms of making the web distributed, immutable, and secure.

- Integration of IPFS with Blockchain.

Credit: Nemanja Grubor

Blockchain Bridges

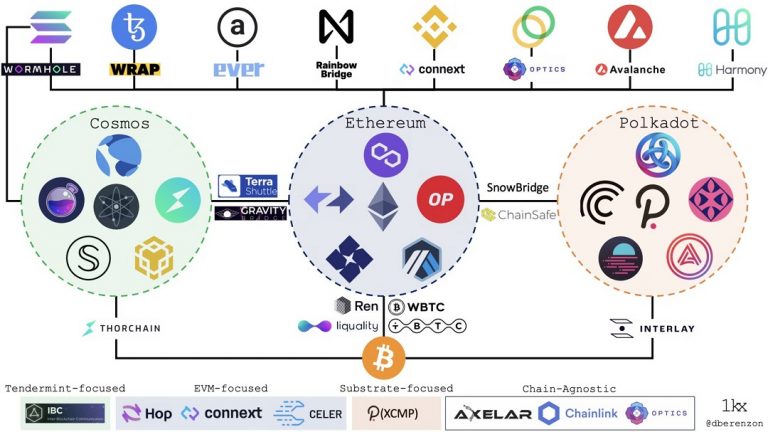

After years of research and development, we have reached a multi-chain market structure. More than 100 public blockchains are currently live, and many of them have their own applications, users, geographic distributions, security models, and design trade-offs. Contrary to what some communities may believe, the universe tends toward entropy, and the number of these networks will probably keep growing. This kind of market structure makes interoperability between these networks necessary. Many developers have realized this, and the number of blockchain bridges that aim to connect this increasingly fragmented landscape has exploded in the past year. As of this writing, there are more than 40 separate bridge projects.

Interoperability unlocks innovation

As individual ecosystems grow, they develop their own unique strengths, for example better security, faster throughput, cheaper transactions, greater privacy, specific resource provisioning (e.g. storage, compute, bandwidth), and regional developer and user communities. Bridges are essential because they allow users to access new platforms, protocols to interoperate with one another, and developers to collaborate on creating new products. More specifically, they allow:

Unlocking new features and use cases for users and developers

Bridges give users and developers more choices. For example:

- Arbitrage SUSHI prices across DEXs on Optimism, Arbitrum, and Polygon

- Pay for storage on Arweave using Bitcoin

- Join a PartyBid for an NFT on Tezos

Greater productivity and utility for existing cryptoassets

Bridges enable existing crypto assets to travel to new places and do new things. For example:

- Sending DAI to Terra to buy a synthetic asset on Mirror or earn a yield on Anchor

- Sending a TopShot from Flow to Ethereum to use as collateral on NFTfi

- Using DOT and ATOM as collateral to take out a DAI loan on Maker

Greater product capabilities for existing protocols

Bridges expand the design space for what protocols could achieve. For example:

- Yearn vaults for yield farming on Solana and Avalanche

- Cross-chain shared order books for NFTs on Ethereum and Flow for Rarible Protocol

- Proof-of-Stake indices for Index Coop

Bridges

At an abstract level, one could define a bridge as a system that transfers information between two or more blockchains. In this context, “information” could refer to assets, contract calls, proofs, or state. There are several components to most bridge designs:

- Monitoring: There is usually an actor, either an “oracle”, “validator”, or “relayer”, that monitors the state on the source chain.

- Message passing/Relaying: After an actor picks up an event, it needs to transmit information from the source chain to the destination chain.

- Consensus: In some models, consensus is required among the actors monitoring the source chain in order to relay that information to the destination chain.

- Signing: Actors need to cryptographically sign, either individually or as part of a threshold signature scheme, information sent to the destination chain.
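The four components above can be sketched as a small pipeline: validators observe a source-chain event, sign a message about it, and the destination side accepts the message only when enough valid signatures are attached. Everything named here is hypothetical (the validator set, keys, threshold, and event fields), and HMAC with per-validator keys is only a stand-in for the public-key or threshold signatures a real bridge would verify.

```python
import hashlib
import hmac

# Hypothetical validator set; a real bridge would hold public keys instead.
VALIDATOR_KEYS = {"v1": b"key-1", "v2": b"key-2", "v3": b"key-3"}
THRESHOLD = 2  # consensus rule assumed here: at least 2 of 3 validators


def observe(event: dict) -> bytes:
    # Monitoring: turn a source-chain event into a canonical message.
    return f"{event['chain']}:{event['tx']}:{event['to']}:{event['amount']}".encode()


def sign(validator: str, message: bytes) -> str:
    # Signing: each validator attests to the message it observed.
    return hmac.new(VALIDATOR_KEYS[validator], message, hashlib.sha256).hexdigest()


def destination_accepts(message: bytes, signatures: dict) -> bool:
    # Relaying + consensus: count valid attestations on the destination side.
    valid = sum(
        1
        for validator, signature in signatures.items()
        if validator in VALIDATOR_KEYS
        and hmac.compare_digest(signature, sign(validator, message))
    )
    return valid >= THRESHOLD


event = {"chain": "source", "tx": "0xabc", "to": "bob", "amount": 10}
message = observe(event)
signatures = {v: sign(v, message) for v in ("v1", "v3")}

accepted = destination_accepts(message, signatures)
forged = destination_accepts(message, {"v1": signatures["v1"], "v2": "00" * 32})
```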

It is important to note that any given bridge is a two-way communication channel that may use a different model in each direction. Simple categorizations therefore do not accurately represent hybrid designs such as Gravity, Interlay, and tBTC, which all have light clients in one direction and validators in the other. In addition, one could roughly evaluate a bridge design according to the following factors:

- Security: Trust & liveness assumptions, tolerance for malicious actors, the safety of user funds, and reflexivity.

- Speed: Latency to complete a transaction, as well as finality guarantees. There is often a tradeoff between speed and security.

- Connectivity: Selections of destination chains for both users and developers, as well as different levels of difficulty for integrating an additional destination chain.

- Capital efficiency: Economics around capital required to secure the system and transaction costs to transfer assets.

- Statefulness: Ability to transfer specific assets, more complex state, and/or execute cross-chain contract calls.

Issues with Bridges

Building robust cross-chain bridges is an incredibly difficult problem in distributed systems. While there is a lot of activity in the space, there are still several unanswered questions:

- Finality & rollbacks: How do bridges account for block reorgs and time bandit attacks in chains with probabilistic finality? For example, what happens to a user who sent funds from Polkadot to Ethereum if either chain experiences a state rollback?

- NFT transfers & provenance: How do bridges preserve provenance for NFTs that are bridged across multiple chains? For example, if there is an NFT that is bought & sold across marketplaces on Ethereum, Flow, and Solana, how does the record of ownership account for all of those transactions and owners?

- Stress testing: How will the various bridge designs perform under times of chain congestion or protocol- & network-level attacks?

The future of blockchain bridges

While bridges unlock innovation for the blockchain ecosystem, they also pose serious risks if teams cut corners with research & development. The Poly Network hack has demonstrated the potential economic magnitude of vulnerabilities & attacks, and I expect this to get worse before it gets better. While it is a highly fragmented and competitive landscape for bridge builders, teams should remain disciplined in prioritizing security over time-to-market. While an ideal state would have been one homogeneous bridge for everything, it is likely that there is no single “best” bridge design and that different types of bridges will be best fit for specific applications (e.g. asset transfer, contract calls, minting tokens).

Furthermore, the best bridges will be the most secure, interconnected, fast, capital-efficient, cost-effective, and censorship-resistant. These are the properties that need to be maximized if we want to realize the vision of an “internet of blockchains”.

It is still early days and the optimal designs have likely not yet been discovered. There are several interesting directions for research & development across all bridge types:

- Decreasing costs of header verification: Block header verification for light clients is expensive and finding ways to decrease those costs could bring us closer to fully generalized and trustless interoperability. One interesting design could be to bridge to an L2 to decrease those costs. For example, implementing a Tendermint light client on zkSync.

- Moving from trusted to bonded models: While bonded validators are much less capital efficient, “social contracts” are a dangerous mechanism to secure billions of dollars of user funds. Additionally, fancy threshold signature schemes don’t meaningfully reduce trust in these systems; just because it’s a group of signers doesn’t remove the fact that it is still a trusted third party. Without collateralization, users are effectively handing over their assets to external custodians.

- Moving from bonded to insured models: Losing money is bad UX. While bonded validators & relayers disincentivize malicious behavior, protocols should take it one step further and reimburse users directly using slashed funds.

- Scaling liquidity for liquidity networks: These are arguably the fastest bridges for asset transfer, and there are interesting design trade-offs between trust and liquidity. For example, it is possible to enable liquidity networks to outsource capital provisioning with a bonded validator style model where the router can also be a threshold multisig with bonded liquidity.

- Bridge aggregation: While bridge usage will likely follow a power law for specific assets and corridors, aggregators like Li Finance could improve UX for both developers and end-users.

From the article of Dmitriy Berenzon

What is CBDC?

A Central Bank Digital Currency (CBDC) is the sovereign equivalent of private cryptocurrencies and digital assets like Bitcoin, Ethereum, Solana, and Ripple. It would be issued and controlled by a country’s central bank and used by people and businesses for retail payments, much like cash but in digital form. CBDCs will also be used for wholesale settlements in the interbank market.

In simple words, a CBDC can be thought of as a token, typically on a blockchain, that is issued by the country’s central bank and that people can use for day-to-day financial activity, similar to fiat currency. For example, India could issue its own CBDC, i.e., an Indian Rupee token, comparable in form to private cryptocurrencies such as Bitcoin, Ethereum, Ripple, Solana, and Polkadot.

CBDC represents a new technology and approach for the issuance of central bank money, and can be characterized by the following:

Digital asset: CBDC is a digital asset, meaning that it is accounted for in a single ledger (distributed or not) that acts as the single source of truth.

Central bank-backed: CBDC represents a claim against the central bank, just as banknotes do.

Central bank controlled: The supply of CBDC is fully controlled and determined by the central bank.
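These three characteristics can be illustrated with a toy ledger. This is a sketch under simplifying assumptions, not a description of any real CBDC design: the `CbdcLedger` class, its method names, and the account names are all hypothetical.

```python
class CbdcLedger:
    """Toy single ledger in which only the central bank can change the supply."""

    def __init__(self, central_bank: str):
        self.central_bank = central_bank
        self.balances = {}  # the single source of truth for who holds what
        self.supply = 0     # total claims against the central bank

    def issue(self, caller: str, to: str, amount: int) -> None:
        if caller != self.central_bank:
            raise PermissionError("only the central bank can issue CBDC")
        self.balances[to] = self.balances.get(to, 0) + amount
        self.supply += amount

    def transfer(self, sender: str, to: str, amount: int) -> None:
        if amount <= 0 or self.balances.get(sender, 0) < amount:
            raise ValueError("invalid amount or insufficient funds")
        # Transfers move balances around but never change the supply.
        self.balances[sender] -= amount
        self.balances[to] = self.balances.get(to, 0) + amount


ledger = CbdcLedger(central_bank="central_bank")
ledger.issue("central_bank", to="alice", amount=100)
ledger.transfer("alice", to="bob", amount=30)
```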

There can be two types of CBDC:

Wholesale CBDC: CBDC that would be used to facilitate payments between banks and other entities that have accounts at the central bank itself.

Retail CBDC: CBDC is used for retail payments, for example between individuals and businesses, and is akin to digital banknotes.

Architecture:

Benefits of CBDC:

Cross-border payments: A central bank digital currency (CBDC) can boost innovation in cross-border payments, making these transactions instantaneous and helping overcome key challenges relating to time zone and exchange rate differences.

Enhance existing payments infrastructure: A central bank digital currency (CBDC) will increase the speed and efficiency of payments while reducing costs and failure rates.

Maintain control: A CBDC ensures that central banks retain sovereignty over monetary policy and do not allow alternative currencies to dominate the market.

According to the BIS, today some 80% of central banks are looking at CBDC, with the majority of them considering blockchain as the underlying technology. While many of these banks have expressed interest in both wholesale and retail use cases, most of the admittedly few actual experiments or pilots carried out to date have focused on wholesale. These include Project Ubin by the Monetary Authority of Singapore, Project Khokha by the South African Reserve Bank, China’s DC/EP, and Project Stella, a joint research project by the ECB and the Bank of Japan.

What is Substrate?

Substrate is an open-source blockchain framework. It includes all of the essential components for constructing a distributed blockchain network, such as the database, networking, transaction queue, and consensus layers.

Although these layers are extensible, Substrate assumes that the average blockchain developer is not concerned with the implementation specifics of these key components. Instead, Substrate makes it as simple and flexible as possible to design a blockchain’s state transition function. This layer is called the Substrate runtime.

Substrate Components

We assume that you have a basic knowledge of blockchains, i.e., blockchain nodes, block production, finalization, etc. We will now see how Substrate works as a foundation for creating a blockchain. The Substrate framework’s first claim to fame is that it is extensible. This means it makes as few assumptions as possible about how your blockchain is designed and tries to be as general as possible.

Database

The heart of a blockchain, as we’ve seen, is its shared ledger, which must be maintained and stored. Substrate makes no assumptions about the content or structure of the data in your blockchain. A modified Patricia Merkle tree (trie) is implemented on top of the underlying database layer, which employs simple key-value storage. This storage structure allows us to verify quickly whether or not an item is in storage. This is especially crucial for light clients, who will rely on storage proofs to deliver lightweight but trustworthy interactions with the blockchain network.
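To show what a storage proof buys a light client, here is a minimal sketch using a plain binary Merkle tree. This is a deliberately simpler structure than the modified Patricia Merkle trie Substrate uses, and the function names and storage keys are invented for the example; the idea it illustrates is the same: a client that knows only the root hash can check a single item with a short proof.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def build_levels(leaves: list) -> list:
    """Return all tree levels, from hashed leaves up to the single root."""
    levels = [[h(leaf) for leaf in leaves]]
    while len(levels[-1]) > 1:
        level = levels[-1]
        if len(level) % 2:
            level = level + [level[-1]]  # pad odd levels by repeating the last node
        levels.append([h(level[i] + level[i + 1]) for i in range(0, len(level), 2)])
    return levels


def prove(levels: list, index: int) -> list:
    """Collect the sibling hash at each level for the leaf at `index`."""
    proof = []
    for level in levels[:-1]:
        if len(level) % 2:
            level = level + [level[-1]]
        sibling = index ^ 1
        proof.append((level[sibling], sibling < index))
        index //= 2
    return proof


def verify(root: bytes, leaf: bytes, proof: list) -> bool:
    node = h(leaf)
    for sibling, sibling_is_left in proof:
        node = h(sibling + node) if sibling_is_left else h(node + sibling)
    return node == root


storage = [b"balance/alice=10", b"balance/bob=7", b"balance/carol=3"]
levels = build_levels(storage)
root = levels[-1][0]            # the only value the light client needs to trust
proof = prove(levels, 2)

genuine = verify(root, b"balance/carol=3", proof)
forged = verify(root, b"balance/carol=300", proof)
```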

Networking

A peer-to-peer networking protocol must be established for a decentralized blockchain system to communicate. Libp2p is a modular peer-to-peer networking stack used by Substrate. Thanks to this networking layer, Substrate-based blockchains can share transactions, blocks, peers, and other system-critical details without the use of centralized servers. Libp2p is unusual in that it makes no assumptions about your specific networking protocol, which is in accordance with Substrate’s philosophy. As a result, alternative transports can be implemented and used on top of a Substrate-based blockchain.

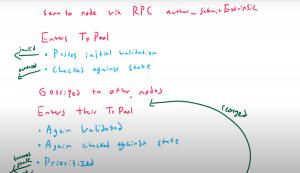

Transaction Queue

As previously stated, transactions are gathered and organized into blocks, which determine how the state of the blockchain changes. However, the order in which these transactions are executed can have an impact on the ledger’s final state. Substrate gives you complete control over transaction dependencies and queue management on your network. Substrate simply assumes a transaction has a weight and a set of prerequisite tags for creating dependency graphs. In the simplest situation, these dependency graphs are linear, but they can get more complex. Substrate takes care of all of the intricacies for you.
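The tag idea can be sketched as a small ordering routine: a transaction becomes ready once every tag it requires has been provided by an earlier transaction. This is an illustration, not Substrate's transaction pool. The dictionary fields (`requires`, `provides`, `weight`) and the rule that lighter transactions go first among ready ones are assumptions made for the example.

```python
def order_transactions(pool: list) -> list:
    """Order transactions so that every required tag is provided beforehand."""
    provided = set()
    ordered = []
    pending = list(pool)
    while pending:
        ready = [tx for tx in pending if set(tx["requires"]) <= provided]
        if not ready:
            break  # the rest wait for tags that nothing in the pool provides
        # Arbitrary tie-break for this sketch: the lightest ready transaction first.
        ready.sort(key=lambda tx: tx["weight"])
        tx = ready[0]
        ordered.append(tx["id"])
        provided.update(tx["provides"])
        pending.remove(tx)
    return ordered


pool = [
    {"id": "alice-nonce-2", "weight": 5, "requires": ["alice:1"], "provides": ["alice:2"]},
    {"id": "bob-nonce-1", "weight": 9, "requires": [], "provides": ["bob:1"]},
    {"id": "alice-nonce-1", "weight": 7, "requires": [], "provides": ["alice:1"]},
    {"id": "carol-nonce-2", "weight": 1, "requires": ["carol:1"], "provides": ["carol:2"]},
]
order = order_transactions(pool)
```

Here Alice's second transaction is placed only after her first, and Carol's transaction stays in the queue because nothing has provided the tag it depends on.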

Consensus

Remember that a blockchain network can reach a consensus on updates to the chain in a variety of ways. Traditionally, these consensus engines have been inextricably linked to the rest of the blockchain. Substrate, on the other hand, has gone to great lengths to establish a consensus layer that can be easily altered during development. In fact, it was designed in such a way that when the chain went live, consensus could be hot-swapped! Multiple consensus engines, including standard Proof of Work (PoW), Aura (Authority Round), and Polkadot consensus, are built into Substrate. Polkadot consensus is unusual in that it isolates the block production process (BABE) from the block finalization process (GRANDPA).

Substrate Runtime

So far, we’ve covered all of the key blockchain components that Substrate offers. As you have seen, Substrate makes every attempt to be as general and extensible as possible. The modular runtime, however, is likely the most configurable aspect of Substrate. The runtime is Substrate’s state transition function, which we discussed earlier.

Substrate thinks that the common blockchain developer doesn’t need to be concerned with the above-mentioned blockchain components. The implementation details are generally unimportant as long as the components have been battle-tested and are ready for production. The underlying blockchain logic that defines what is and is not acceptable for a network, on the other hand, is frequently crucial for any chain.

As a result, Substrate’s main goal is to make blockchain runtime development as simple and versatile as possible.

Substrate Runtime Module Library (SRML)

A Substrate runtime is separated into logical components known as runtime modules. Each module is in charge of some aspect of the blockchain’s on-chain functionality. These modules can be thought of as “plug-ins” for your system. As a Substrate developer, you have complete control over which modules and features you include in your chain.

The currency of the chain, for example, is managed by a module called “Balances.” There are other modules for decision-making and governance for the chain, such as “Collective,” “Democracy,” and “Elections.” There’s even a “Contracts” module that can convert any Substrate-based chain into a smart contract platform. When you develop on Substrate, you get these kinds of modules automatically.

However, Substrate’s modules are not the only ones available. Developers can easily create their own runtime modules, either as standalone logical components or by interacting directly with other runtime modules to create more complex logic. I believe that, in the long run, Substrate’s module structure will function similarly to an “app store,” where users can simply pick and choose whatever features they wish to include, and construct a distributed blockchain network with minimal technical knowledge!

Forkless Runtime Upgrades

If we consider the Substrate module ecosystem to be similar to an app store, we must also consider how we update our runtime. Substrate makes changing your runtime a first-class operation, whether it’s for bug fixes, general enhancements to current modules, or new features you wish to add to your blockchain.

Changes to your chain’s state transition function, however, affect the network’s consensus. If one node on your network runs one version of your runtime logic and another node runs a different version, the two nodes will be unable to reach agreement. They will fundamentally disagree about the true state of the ledger, which results in a fork. Irreconcilable forks are problematic because they weaken your network’s security: only a fraction of the nodes will be correctly constructing and validating new blocks.

Substrate addresses this problem by allowing the network to agree on the runtime logic itself! The Substrate runtime code can be stored on the blockchain as part of the shared ledger, in the Wasm binary format. This means that anyone running a node can check whether their node has the most up-to-date logic. If it doesn’t, the node will directly execute the on-chain Wasm instead! This means that your blockchain may be upgraded in real-time, on a live network, without forking!
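The decision a node makes can be sketched as follows. This is a conceptual model only: plain Python functions stand in for compiled runtimes and for the on-chain Wasm blob, and the `Node` class, the version numbers, and the `chain_state` layout are hypothetical.

```python
def runtime_v1(balance: int, amount: int) -> int:
    return balance + amount


def runtime_v2(balance: int, amount: int) -> int:
    return balance + amount - 1  # the upgraded logic charges a flat fee of 1


class Node:
    def __init__(self, native_version: int, native_runtime):
        self.native_version = native_version
        self.native_runtime = native_runtime

    def execute(self, chain_state: dict, balance: int, amount: int) -> int:
        # Use the built-in runtime only if it matches the version agreed on-chain.
        if self.native_version == chain_state["runtime_version"]:
            return self.native_runtime(balance, amount)
        # Otherwise fall back to the runtime code stored in the shared ledger.
        return chain_state["runtime_code"](balance, amount)


old_node = Node(native_version=1, native_runtime=runtime_v1)
new_node = Node(native_version=2, native_runtime=runtime_v2)

# The network upgrades: the new runtime is now part of the chain state.
chain_state = {"runtime_version": 2, "runtime_code": runtime_v2}

old_result = old_node.execute(chain_state, balance=10, amount=5)
new_result = new_node.execute(chain_state, balance=10, amount=5)
```

Both nodes compute the same result after the upgrade, even though the old node was never updated, which is why no fork occurs.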

To learn more about the basics of Substrate code, you can visit here.

What are Aura, Babe, POW, and Grandpa in Substrate/Polkadot?

There are different ways that a blockchain network can come to a consensus about changes to the chain. Aura, Babe, Grandpa, and Proof of Work (POW) are different consensus mechanisms available in Substrate/Polkadot. These consensus layers are designed so that they can be easily changed during development and could even be hot-swapped after the chain goes live!

Let’s talk about all these in detail.

AURA (Authority Round)

Aura primarily provides block authoring. In Aura, a known set of authorities is allowed to produce blocks. The authorities must be chosen before block production begins, and all authorities must know the entire authority set. Time is divided into “slots” of a fixed length. During each slot, one block is produced, and the authorities take turns producing blocks in order, indefinitely.

In Aura, forks only happen when it takes longer than the slot duration for a block to traverse the network. Thus forks are uncommon in good network conditions.
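The round-robin rule can be sketched in a few lines. The function names, the authority names, and the 6-second slot length are assumptions chosen for the example rather than values taken from any particular chain.

```python
def slot_at(timestamp_ms: int, slot_duration_ms: int) -> int:
    """Map a timestamp to the number of the slot it falls in."""
    return timestamp_ms // slot_duration_ms


def aura_author(authorities: list, slot: int) -> str:
    """Authorities take turns in a fixed order, one block per slot."""
    return authorities[slot % len(authorities)]


authorities = ["alice", "bob", "carol"]
SLOT_DURATION_MS = 6_000  # hypothetical slot length

schedule = [aura_author(authorities, slot) for slot in range(7)]
author_now = aura_author(authorities, slot_at(25_000, SLOT_DURATION_MS))
```

Because every node knows the full authority set and the slot length, each one can compute the expected author of any slot locally.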

BABE (Blind Assignment for Blockchain Extension)

Babe also primarily provides block authoring. It’s a slot-based consensus mechanism with a known set of validators, similar to Aura. In addition, each validator is given a weight, which must be assigned before block production can begin. Unlike in Aura, the authorities do not take turns in a strict order. Instead, during each slot, each authority uses a verifiable random function (VRF) to generate a pseudorandom number. An authority is allowed to produce a block if its random number is less than its weight.

Forks are more prevalent in Babe than in Aura, and they happen even in ideal network conditions, because many validators may be able to create a block during the same slot.

Substrate’s implementation of Babe also has a fallback mechanism for when no authorities are chosen in a given slot.
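The slot lottery can be sketched as below. Several things here are assumptions made for illustration: a SHA-256 hash of a secret and the slot number stands in for a real VRF (which would also produce a proof that others can verify), weights are expressed as probabilities between 0 and 1, and the fallback is modeled as a simple round-robin pick, which may differ from how Substrate actually implements it.

```python
import hashlib


def vrf_draw(secret: bytes, slot: int) -> float:
    """Stand-in for a VRF: a pseudorandom number in [0, 1) per authority and slot."""
    digest = hashlib.sha256(secret + slot.to_bytes(8, "big")).digest()
    return int.from_bytes(digest[:6], "big") / 2**48


def primary_authors(authorities: dict, slot: int) -> list:
    """Every authority whose draw is below its weight may author in this slot."""
    return [
        name
        for name, (secret, weight) in authorities.items()
        if vrf_draw(secret, slot) < weight
    ]


def authors_for_slot(authorities: dict, slot: int) -> list:
    winners = primary_authors(authorities, slot)
    if winners:
        return winners  # may contain several names, which is how forks arise
    names = sorted(authorities)
    return [names[slot % len(names)]]  # assumed fallback when nobody wins


authorities = {
    "alice": (b"alice-secret", 0.3),
    "bob": (b"bob-secret", 0.3),
    "carol": (b"carol-secret", 0.3),
}
authors_per_slot = [authors_for_slot(authorities, slot) for slot in range(20)]
```

Since each authority draws independently, a slot can have zero, one, or several eligible authors, which matches the observation that forks are more common in Babe than in Aura.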

Proof-Of-Work (POW)

Proof of Work also provides block authoring. Unlike BABE and Aura, it is not slot-based and does not have a known authoring set. In Proof of Work, anyone can produce a block at any time, so long as they can solve a computationally challenging problem (typically a hash preimage search). The difficulty of this problem can be tuned to provide a statistical target block time.
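A minimal sketch of such a search is shown below: the miner tries nonces until the hash of the header plus nonce falls below a target. The function names, the header bytes, and the choice of 12 difficulty bits are assumptions made for the example; real chains use their own header formats and difficulty rules.

```python
import hashlib


def work_hash(header: bytes, nonce: int) -> int:
    digest = hashlib.sha256(header + nonce.to_bytes(8, "big")).digest()
    return int.from_bytes(digest, "big")


def is_valid_work(header: bytes, nonce: int, difficulty_bits: int) -> bool:
    # The hash must be below the target; each extra bit halves the target.
    return work_hash(header, nonce) < (1 << (256 - difficulty_bits))


def mine(header: bytes, difficulty_bits: int) -> int:
    """Try nonces in order until one satisfies the difficulty."""
    nonce = 0
    while not is_valid_work(header, nonce, difficulty_bits):
        nonce += 1
    return nonce


header = b"parent=0xabc;transactions=alice->bob:5"
DIFFICULTY_BITS = 12  # about 4096 attempts on average

nonce = mine(header, DIFFICULTY_BITS)
accepted = is_valid_work(header, nonce, DIFFICULTY_BITS)
```

Finding the nonce takes many attempts, but checking it takes a single hash, and raising `DIFFICULTY_BITS` by one doubles the expected work, which is how the target block time can be tuned.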

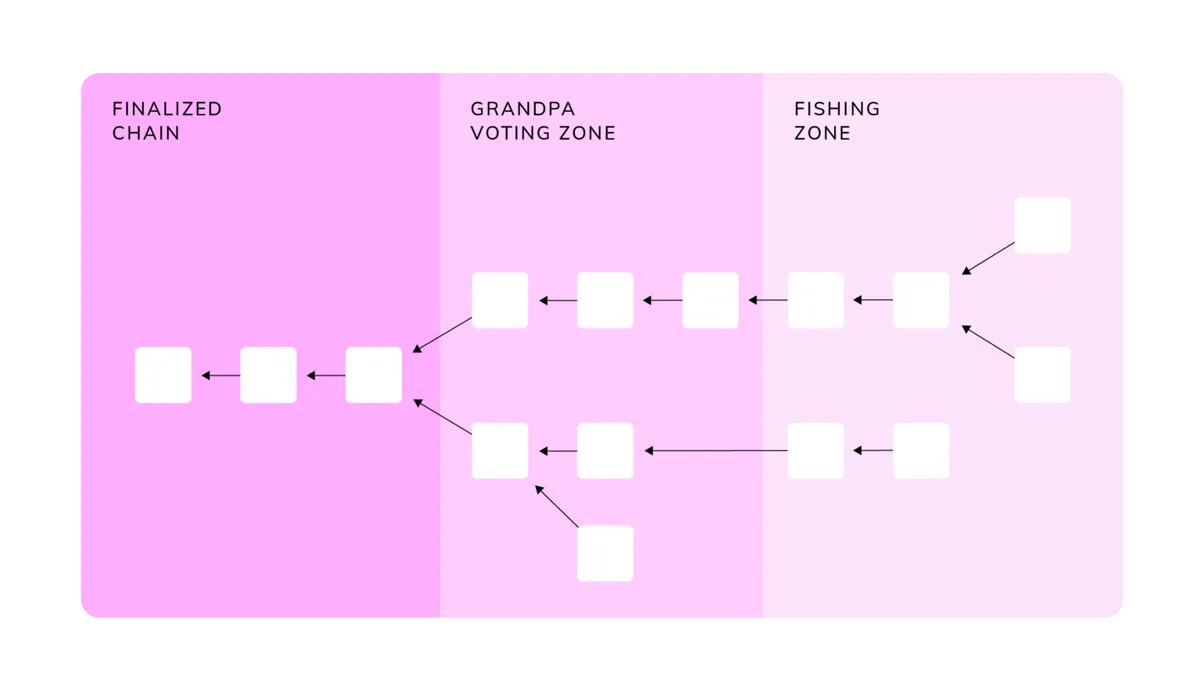

GRANDPA (GHOST-based Recursive ANcestor Deriving Prefix Agreement)

GRANDPA provides block finalization. It has a known weighted authority set, like BABE. However, GRANDPA doesn’t author blocks. It just listens to gossip about blocks that have been produced by an authoring engine such as the three mentioned above.

It works in a partially synchronous network model as long as 2/3 of nodes are honest and can cope with 1/5 Byzantine nodes in an asynchronous setting.

GRANDPA distinguishes itself by reaching agreements on chains rather than blocks, which speeds up the finalization process significantly, even after long-term network partitioning or other networking difficulties.

Each authority participates in two rounds of voting on blocks. Once more than 2/3 of the GRANDPA authorities have voted for a particular block, it is considered finalized.
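The idea of agreeing on chains rather than blocks can be sketched as follows: a vote for a block also counts as a vote for all of its ancestors, and the highest block backed by a supermajority is finalized. This is a heavily simplified model with assumptions stated plainly: it uses a single voting round instead of two, equal weights for all authorities, and a strict "more than 2/3" threshold; the function names and block labels are invented for the example.

```python
def chain_to_genesis(parents: dict, block: str) -> list:
    """Return the block followed by all of its ancestors."""
    chain = []
    while block is not None:
        chain.append(block)
        block = parents[block]
    return chain


def finalized_block(parents: dict, votes: dict, n_authorities: int):
    support = {}
    for voted_block in votes.values():
        # A vote for a block supports every block on its chain.
        for block in chain_to_genesis(parents, voted_block):
            support[block] = support.get(block, 0) + 1
    final = [b for b, count in support.items() if 3 * count > 2 * n_authorities]
    # Pick the highest block that has supermajority support, if any.
    return max(final, key=lambda b: len(chain_to_genesis(parents, b)), default=None)


# G <- A <- B, then a fork: B <- C1 and B <- C2
parents = {"G": None, "A": "G", "B": "A", "C1": "B", "C2": "B"}
votes = {"v1": "C1", "v2": "C1", "v3": "C2", "v4": "B"}

result = finalized_block(parents, votes, n_authorities=4)
```

The authorities disagree about which tip is best, yet all four votes support the chain up to `B`, so `B` and everything before it can be finalized in one step.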

What is Decentralized Identity?

Decentralized Identity is an emerging concept, in which control is given to the consumers through the use of an identity wallet, through which they collect verified information about themselves from certified issuers.

In this article, we’ll be looking at DIDs: what they are, DID documents, verifiable credentials, and how they work.

I’ll also try to explain why we use DIDs and what problems they aim to solve.

The problem

Secrets such as passwords and encryption keys help protect access to resources such as computing devices, customer data, and other information. Unauthorized access to these resources can cause significant disruption and other negative consequences. Many solutions have been proposed to protect these secrets and, in turn, the security and privacy of software systems. According to research by Zakwan Jaroucheh, each of these solutions follows the same approach: once the consumer receives the secret, it can be leaked and used by any malicious actor. Time and time again, we’ve heard of cases of compromised private information leading to the loss of billions of dollars.

How, then, can we decentralize secret management so that the secret never has to be sent to the consumer? This is where DIDs come in.

First, let’s define Identity.

Identity is the fact of being who or what a person or thing is, as defined by unique characteristics. An identifier, on the other hand, is a piece of information that points to a particular identity. It could be a name, date of birth, address, email address, etc.

A decentralized identifier (DID) is an address on the internet that a subject (which could be you, a company, a device, a data model, or a thing) can own and directly control. It can be used to find a DID document connected to it, which provides extra information for verifying the signatures of that subject. The subject can update or remove the information on the DID document directly.

For instance, if you’re on Twitter, you likely own a username. Think of a DID as your username on Twitter, except that a DID is randomly generated. Other information about you is accessible through your username (via the DID document), and you can update this information over time.

Each DID begins with a prefix that identifies its DID method. This prefix makes it easy to identify the DID’s origin, or where to go to fetch its DID document. For instance, a DID from the Sovrin network begins with did:sov, while one from Ethereum begins with did:ethr. Find the list of registered DID prefixes here.
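As a small illustration, a DID string can be split into its method prefix and its method-specific identifier. The `parse_did` helper is a hypothetical name, it performs only basic checks, and the identifiers below are made-up examples rather than real registered DIDs.

```python
def parse_did(did: str) -> tuple:
    """Split 'did:<method>:<identifier>' into (method, identifier)."""
    parts = did.split(":", 2)
    if len(parts) != 3 or parts[0] != "did" or not parts[1] or not parts[2]:
        raise ValueError(f"not a DID: {did!r}")
    return parts[1], parts[2]


# Made-up identifiers, used only to show the shape of a DID.
sovrin_method, sovrin_id = parse_did("did:sov:WRfXPg8dantKVubE3HX8pw")
ethr_method, ethr_id = parse_did("did:ethr:0xb9c5714089478a327f09197987f16f9e5d936e8a")
```

The method name tells a resolver which network or registry to ask for the DID document.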

Let’s briefly look at some of the concepts you’ll likely come across when learning about DIDs.

DID Document

In a nutshell, a DID document is a set of data that describes a Decentralized Identifier. According to JSPWiki, a DID Document is a set of data that represents a W3C Decentralized Identifier, including mechanisms, such as public keys and pseudonymous biometrics, that an entity can use to authenticate itself and prove its association with the identifier. Additional characteristics or claims describing the entity may also be included in a DID Document.

DID Method

According to W3C, a DID method is defined by a DID method specification, which specifies the precise operations by which DIDs and DID documents are created, resolved, updated, and deactivated. The associated DID document is returned when a DID is resolved using a DID Method.

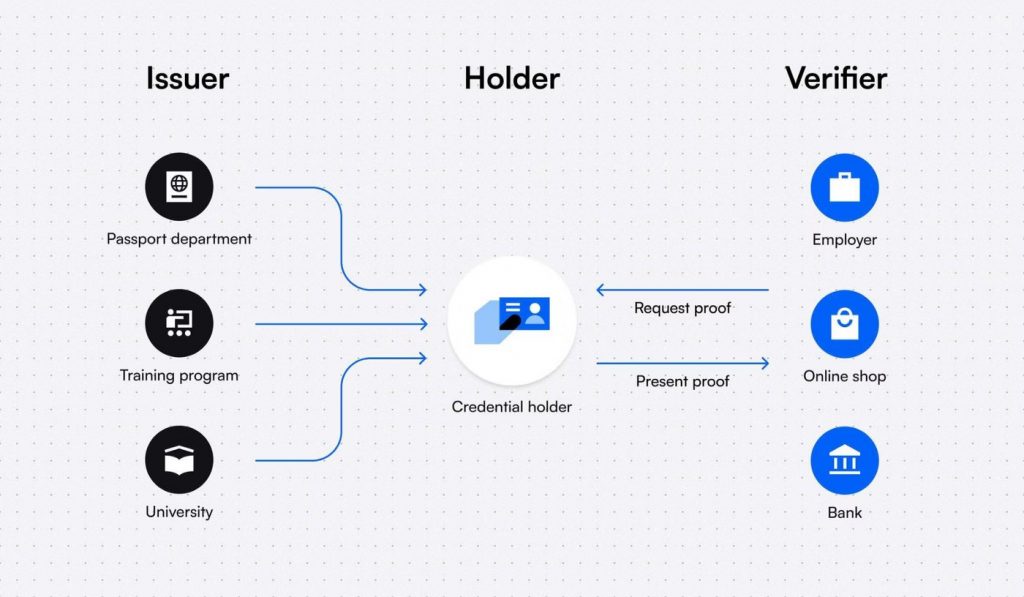

Verifiable Credentials

When you hear of verifiable credentials (VCs), what comes to mind? Probably your passport, license, certifications, and any other identification you might have.

Those are credentials in the physical world. Digitally, how can someone verify or examine your identity? A verifiable credential, in the simplest terms, is a tamper-evident credential that can be verified cryptographically.

A verifiable credential ecosystem consists of three entities:

- The Issuer

- The Holder

- The Verifier

The entity issuing the credential is known as the issuer; the entity for whom the credential is issued is known as the holder, and the entity determining whether the credential satisfies the requirements for a VC is known as the verifier.

For example, say a school certifies that a particular individual has taken the degree exams, and a machine checks this information for authenticity.

Here, the issuer is the school, the holder is the individual who took the exams, and the verifier is the machine that checks the verifiable presentation for its authenticity. Once verified, the holder is free to share it with anyone they wish.

I hope you've been able to follow along up to this point.

Let’s take a dive into some of the reasons for decentralized identity.

Following his critique of web 3.0, Jack Dorsey, the former CEO of Twitter, introduced the web 5.0 initiative. By claiming that ownership is still a myth since venture capitalists and limited partnerships will take on a sizable chunk of the web, Dorsey highlighted the current constraints in web 3.0. He claimed that web 3.0 would keep a lot of things centralized, necessitating the creation of web 5.0.

One of the prime use cases for web 5 is empowering users with control of their identity, which we refer to as Decentralized Identity, a term used interchangeably with Self-Sovereign Identity (SSI). It is an approach to digital identity that gives individuals control of their digital identities. Why did Jack introduce web 5? Why do more people want to take back control of their data through decentralization and blockchain? What benefits does this hold for people and organizations?

Benefits of decentralized identity for Organizations

- Decentralized Identities allow organizations to verify information instantly without having to contact the issuing party, like a driver's licensing organization or university, to ensure that IDs, certificates, or documents are valid. Manually verifying credentials takes a lot of time, sometimes weeks or months, which slows down recruitment and processing while using a lot of financial and human resources. With DIDs, we can quickly and easily validate someone's credentials by scanning a QR code or running them through a credential validator tool. Here is a typical example of how a company can leverage decentralized identity technology to hire efficiently:

  * Anita, a job applicant, manages her decentralized identity and Verifiable Credentials on her phone with a wallet and wants to apply to a company looking for a community manager.

  * She attended a boot camp that gave her a community management degree, which she keeps in her digital wallet as a Verifiable Credential that can't be faked.

  * The company makes a job offer and just needs to check that her certificate is authentic.

  * The company requests her data, and she is prompted on her phone to authorize showing her certificate to the company.

  * The company receives a QR code and simply scans it to instantly confirm that her community management certificate is authentic.

  * They offer Anita the job.

The traditional, manual verification process would have taken several weeks or months to achieve the same outcome.

- DIDs enable issuing organizations to conveniently provide Verifiable Credentials to people and prevent fraud, which in turn greatly reduces costs and increases efficiency. Many people, even in high-risk positions, use forged or fraudulent certificates to apply for jobs. A university can issue fraud-resistant credentials that recruiting organizations can easily verify, thereby reducing the possibility of forgery.

Benefits of decentralized identity for Individuals

- Decentralized identity increases individual control of identifying information. Decentralized IDs and attestations can be validated without relying on a centralized authority or third-party services.

- People can choose the details they want to share with particular entities, including the government or their employer.

- Decentralized identity makes identity data portable. Users store attestations and IDs in their mobile wallets and can share them with anybody they choose. Decentralized identities and attestations are not permanently stored in the issuing organization's database. Assume that someone called Anita has a digital wallet that helps her manage authorizations, IDs, and data for connecting to different applications. Anita can use the wallet to sign in to a decentralized social media app. She wouldn't need to worry about making a profile because the app already recognizes her as Anita. Her interactions with the app are stored on a decentralized web node. Anita can then switch to other social media apps and take along the social persona she created on the first app.

- Decentralized identity enables anti-Sybil mechanisms, which identify when one individual is pretending to be multiple people in order to game or spam a system. Because the user needs to use identical credentials each time, it becomes impractical to log in several times without the system noticing a duplicate.

Conclusion

Decentralized Identity has a lot of pros, and many individuals and organizations are already adopting it. Companies like Spruce ID, Veramo, Sovrin, Unum ID, and Atos have worked hard to create decentralized identity solutions. I look forward to seeing where these efforts lead and to seeing DIDs used in many more applications.

For further reading, feel free to check out these resources

- https://identity.foundation/faq/

- https://www.gsma.com/identity/decentralised-identity

- https://venturebeat.com/2022/03/05/decentralized-identity-using-blockchain/

From the article of Amarachi Emmanuela Azubuike

Understanding Basic Substrate Code


- ChainSpec.rs: The chain spec file defines the initial state from which the chain starts. From there we can do the following things:

  - We can add or manage the accounts that are available when the chain starts.

  - We can define how a new account is generated.

  - We can pre-fund accounts so that they can make transactions on the chain.

  - We can add any other prerequisite that a pallet needs.

  - Basically, we can set up everything the chain needs at its start (the genesis block).

- Cli.rs: The cli file is responsible for customizing the commands used to interact with the chain, such as:

  - How blocks are generated: instant-seal, manual, or default.

  - How we can build the spec for the chain, purge the chain, and many more.

  - Basically, whatever we can do with the chain with the help of commands.

- Command.rs: It is the extension or helper file for the cli file.

- Main.rs: It is the main file, used only to instantiate the cli.

- Rpc.rs: The RPC file is responsible for all the methods and customization related to RPC (Remote Procedure Call).

- Service.rs: The service file is responsible for the main business logic of block generation, such as:

  - The consensus protocol.

  - How blocks are generated differently for different commands, like manual or instant seal.

  - How nodes of the chain interact with each other.

  - Everything related to the database, telemetry, RPC, finality, and much more. It basically takes the configuration from the Runtime module, which we set up for each pallet.

- Runtime: The Runtime is responsible for the coupling and configuration of the pallets. It contains lib.rs, which holds the main code, along with benchmarking and other files.

- Lib.rs: This is the file where we do the coupling and customization. Let's go through it step by step:

  - First, it has all the basic configuration related to the runtime, like chain name, chain version, etc.

  - We need to configure and implement all the pallets we are using in the chain, like frame_system, grandpa, aura, timestamp, sudo, etc.

  - We create the chain runtime by adding all the pallets into it.

```rust
// Assembles the runtime from the pallets configured in lib.rs.
construct_runtime!(
    pub enum Runtime where
        Block = Block,
        NodeBlock = opaque::Block,
        UncheckedExtrinsic = UncheckedExtrinsic
    {
        System: frame_system,
        RandomnessCollectiveFlip: pallet_randomness_collective_flip,
        Timestamp: pallet_timestamp,
        Aura: pallet_aura,
        Grandpa: pallet_grandpa,
        Balances: pallet_balances,
        TransactionPayment: pallet_transaction_payment,
        Sudo: pallet_sudo,
    }
);
```

  - We can also add more business logic specific to any pallet or to the node.

  - We also implement the different traits for the different operations on the node, like executing a block, finalizing a block, validating transactions, etc.

Basically, we can customize the block on two levels:

- Level 1: At this level, we can only customize validation, and this can be done in the pallet.

- Level 0: At this level, we can customize the structure or basic behavior of the block. We can segregate these customizations into two types:

  - Type 1: The changes we covered under Service.rs.

  - Type 2: The changes we can make from the runtime.

    - We can update the block generation time from the configuration of the timestamp pallet. In the same way, we can do more customizations with the help of other pallets.

    - We can customize the block in the transaction pool.

    - We can add conditions or validations in the different block methods, like executing a block, finalizing a block, etc.

    - We make the above changes in the implementation of the related traits. If we want to customize the block further, we can look at the other methods in the traits of the executive file.
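As a rough illustration of the block-time customization mentioned above: to my knowledge, the Substrate node template expresses the target block time as a millisecond constant and sets the timestamp pallet's minimum period to half the slot duration. The sketch below is plain Rust that only mirrors this arithmetic. The constant names follow the template's convention, the value of 6 seconds is an assumption, and the helper functions are hypothetical, so check them against your Substrate version.

```rust
/// Target block time in milliseconds (6 seconds is an assumed value).
const MILLISECS_PER_BLOCK: u64 = 6_000;

/// Slot length used for block authoring; equal to the block time in this sketch.
const SLOT_DURATION: u64 = MILLISECS_PER_BLOCK;

/// Minimum gap between two block timestamps: half the slot duration.
fn minimum_period(slot_duration_ms: u64) -> u64 {
    slot_duration_ms / 2
}

/// Number of blocks expected per minute for a given block time.
fn blocks_per_minute(millisecs_per_block: u64) -> u64 {
    60_000 / millisecs_per_block
}

fn main() {
    println!("minimum period: {} ms", minimum_period(SLOT_DURATION));
    println!("blocks per minute: {}", blocks_per_minute(MILLISECS_PER_BLOCK));
}
```

Halving the block-time constant doubles the number of blocks per minute, which is why time-based settings in other pallets are usually derived from the same constant.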

Blockchain Jargons


As a blockchain developer, you must learn about different technologies and trends that have dominated the blockchain space. This blog post will walk you through sidechains, atomic transactions, atomic swaps, non-custodial wallets, Layer 2 blockchain solutions, IDOs, and Turing Complete smart contracts.

Make sure to understand each concept because many blockchain companies expect blockchain developers to understand the concepts that have shaped the blockchain space.

Let’s look at the first concept, which is atomic transactions.

Atomic Transactions and Why Use Them?

An atomic transaction allows you to group multiple transactions so that they are submitted at one time. If any of the transactions in this group fails, then all other transactions in this group fail as well. In other words, an atomic transaction guarantees that either all transactions succeed or all of them fail.

Now you might wonder why developers would want this functionality? For instance, this is particularly useful for decentralized exchanges. When both parties agree to exchange assets, they both create a transaction and group it as an atomic transaction. Therefore, if one transaction fails, an atomic transaction guarantees that the other transaction will also fail. This characteristic is important because you don’t want to send an asset to an unknown person without receiving the promised asset in return.
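The all-or-nothing behavior can be sketched as follows. This is a simplified in-memory model, not the API of any particular blockchain: the `Transfer` type, the `Ledger` alias, and `apply_atomic` are hypothetical names. The group is applied to a copy of the ledger, and the copy replaces the real ledger only if every transfer succeeds.

```rust
use std::collections::HashMap;

/// One transfer of `amount` units of `asset` between two accounts.
struct Transfer {
    from: &'static str,
    to: &'static str,
    asset: &'static str,
    amount: u64,
}

/// Balances keyed by (account, asset).
type Ledger = HashMap<(&'static str, &'static str), u64>;

/// Applies every transfer in the group, or none of them.
fn apply_atomic(ledger: &mut Ledger, group: &[Transfer]) -> Result<(), String> {
    // Work on a draft so a failure leaves the real ledger untouched.
    let mut draft = ledger.clone();
    for t in group {
        let balance = draft.get(&(t.from, t.asset)).copied().unwrap_or(0);
        if balance < t.amount {
            return Err(format!("{} cannot send {} {}", t.from, t.amount, t.asset));
        }
        draft.insert((t.from, t.asset), balance - t.amount);
        *draft.entry((t.to, t.asset)).or_insert(0) += t.amount;
    }
    // Every transfer succeeded: commit the whole group at once.
    *ledger = draft;
    Ok(())
}

fn main() {
    let mut ledger = Ledger::new();
    ledger.insert(("alice", "GOLD"), 10);
    ledger.insert(("bob", "SILVER"), 5);

    // Alice and Bob swap assets in one atomic group.
    let swap = [
        Transfer { from: "alice", to: "bob", asset: "GOLD", amount: 10 },
        Transfer { from: "bob", to: "alice", asset: "SILVER", amount: 5 },
    ];
    println!("swap result: {:?}", apply_atomic(&mut ledger, &swap));
}
```

If Bob's transfer failed, Alice's transfer would be discarded together with the draft, so she would never send her asset without receiving the promised one.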

Atomic Swap

Smart contracts facilitate atomic swaps, which allow users to exchange assets without using a centralized exchange. Most often, two different blockchain platforms would use an atomic swap contract to make their tokens interoperable.

These contracts would use Hashed Timelock Contracts (HTLC), which are time-bound smart contracts that require the involved parties to confirm the transaction within a specified timeframe. If this happens, the transaction succeeds, and the contract exchanges the funds. If it does not, the funds are returned to their original owners. Thus, it allows users to safely exchange tokens without using a centralized exchange.
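A minimal sketch of the two locks in an HTLC is shown below, under loud assumptions: the `Htlc` type and its methods are hypothetical, time is a plain number, and `toy_hash` uses the standard library's `DefaultHasher` purely as a stand-in for a cryptographic hash such as SHA-256. It is not secure and only illustrates the control flow.

```rust
use std::collections::hash_map::DefaultHasher;
use std::hash::{Hash, Hasher};

/// Stand-in for a cryptographic hash. NOT secure; illustration only.
fn toy_hash(data: &str) -> u64 {
    let mut hasher = DefaultHasher::new();
    data.hash(&mut hasher);
    hasher.finish()
}

#[derive(Debug, PartialEq)]
enum Outcome {
    PaidToRecipient,
    RefundedToSender,
    Rejected,
}

/// Funds locked behind a hash lock and a time lock.
struct Htlc {
    hash_lock: u64, // hash of a secret chosen by the sender
    deadline: u64,  // time after which the sender can reclaim the funds
    settled: bool,  // true once the funds have been paid out or refunded
}

impl Htlc {
    fn new(hash_lock: u64, deadline: u64) -> Self {
        Htlc { hash_lock, deadline, settled: false }
    }

    /// The recipient claims the funds by revealing the secret before the deadline.
    fn claim(&mut self, preimage: &str, now: u64) -> Outcome {
        if !self.settled && now < self.deadline && toy_hash(preimage) == self.hash_lock {
            self.settled = true;
            Outcome::PaidToRecipient
        } else {
            Outcome::Rejected
        }
    }

    /// The sender takes the funds back once the deadline has passed unclaimed.
    fn refund(&mut self, now: u64) -> Outcome {
        if !self.settled && now >= self.deadline {
            self.settled = true;
            Outcome::RefundedToSender
        } else {
            Outcome::Rejected
        }
    }
}

fn main() {
    let mut htlc = Htlc::new(toy_hash("open sesame"), 100);
    println!("{:?}", htlc.claim("open sesame", 50));
}
```

In a swap across two chains, both contracts use the same hash lock, so revealing the secret to claim on one chain lets the counterparty claim on the other.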

However, this technology has become less popular as it comes with some disadvantages, such as slow trading speed and a small number of users, which causes price slippage.

Fun side note: The first atomic swap ever was conducted between Decred and Litecoin in September 2017.

Non-custodial Wallets

A non-custodial wallet gives you full control over your funds because you own the key pair for your wallet. In other words, only you know the private key to unlock your wallet. However, if you lose the private key, you lose all access to your wallet. Therefore, it's important to store a backup of the key somewhere safe in case you forget or lose it. Unfortunately, you would not be the first person to lose the private key to their wallet.

Most people prefer to use custodial wallets hosted by exchanges. Often, these are shared wallets where exchanges store the funds for multiple users. They track each user's balance in a centralized database. Of course, it's much easier to access your wallet or regain access when you've lost it. However, you sacrifice security for ease of use.

For people who trade on a daily basis, it makes sense to keep their funds in a centralized exchange. Just make sure to know the difference between both.

Sidechains – What is a sidechain?

Many blockchain platforms are trying to implement or have implemented sidechain technology to improve blockchain scalability. Blockchains have to meet an ever-growing demand for scalability. We can increase the block size to store more transactions per block. Unfortunately, that’s not an ideal solution as it requires more processing power to verify these blocks and broadcast them in a timely manner.

Therefore, sidechains allow for faster scaling without altering any of the properties of the underlying blockchain. A sidechain is a separate blockchain that is pegged to the mainchain. This means that both chains are interoperable: assets can move from the sidechain to the mainchain and vice versa.

Using a sidechain, users can transact assets on this chain without congesting the mainchain. On top of that, there’s no requirement for mainchains to store each transaction that happened on the sidechain. Users can transact multiple times, and the mainchain will only store the final balance on its chain when the user decides to swap their assets to the mainchain again.

Layer 2 Blockchain: State Channels and Sidechains

We all know that blockchain technology is in search of scalability. Sidechains have proven to be a great solution. However, more and more developers started building Layer 2 scaling solutions. When we refer to Layer 2 scaling solutions, we talk about solutions built on top of an existing blockchain platform like Ethereum.

State channels are an example of a Layer 2 solution that allows users to execute multiple transactions but only record a single transaction. For instance, suppose you agree to pay someone $10 each day for an entire month. If every payment were made on-chain, you would pay a transaction fee 30 times and add to the congestion of the network.

Instead, you can use a state channel where you both agree to keep track of all the separate transactions yourselves and combine all payments at the end of the month. Instead of paying a transaction fee 30 times, you pay it only once.
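The monthly-payment example can be sketched like this. It is a toy model under assumed names (`Channel`, `Settlement`, `pay`, `close`), not a real state channel protocol: it leaves out the signatures that both parties exchange on every update, and a real channel also needs an on-chain transaction to open and fund it.

```rust
/// An open payment channel funded by the payer's deposit.
struct Channel {
    deposit: u64,           // funds locked by the payer
    owed: u64,              // running total owed to the payee
    offchain_payments: u32, // updates that never touched the chain
}

/// The single result that is recorded on-chain when the channel closes.
#[derive(Debug, PartialEq)]
struct Settlement {
    to_payee: u64,
    refund_to_payer: u64,
    offchain_payments: u32,
}

impl Channel {
    fn open(deposit: u64) -> Self {
        Channel { deposit, owed: 0, offchain_payments: 0 }
    }

    /// Records a payment off-chain by updating the running balance.
    fn pay(&mut self, amount: u64) -> Result<(), &'static str> {
        let new_owed = self.owed.checked_add(amount).ok_or("amount overflow")?;
        if new_owed > self.deposit {
            return Err("payment exceeds channel deposit");
        }
        self.owed = new_owed;
        self.offchain_payments += 1;
        Ok(())
    }

    /// Closes the channel: only this final balance would be submitted on-chain.
    fn close(self) -> Settlement {
        Settlement {
            to_payee: self.owed,
            refund_to_payer: self.deposit - self.owed,
            offchain_payments: self.offchain_payments,
        }
    }
}

fn main() {
    let mut channel = Channel::open(300);
    for _day in 0..30 {
        channel.pay(10).expect("within deposit");
    }
    println!("{:?}", channel.close());
}
```

Thirty daily payments collapse into one settlement, so the transaction fee for the payments is paid once rather than 30 times.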

Note that sidechains are also an example of Layer 2 scaling solutions. If you would like to learn more about Layer 2 solutions, check out the Plasma solution, where designated individuals make sure to move transactions from the Plasma chain to the mainchain.

Initial DEX Offering (IDO) – The New Way of Funding

IDOs have become wildly popular for raising capital in the crypto ecosystem. While ICOs were hot in 2017, the IDO is the new fundraising model of 2020 and beyond.

Instead of selling tokens for a fixed price, an IDO allows investors to start trading the new token immediately. On top of that, liquidity pools make sure that the new token benefits from immediate liquidity. Many ICO projects have failed because there wasn’t sufficient liquidity causing extreme price slippage, negatively affecting the token price and often the project’s long-term survival.

Moreover, an IDO model allows for fairer fundraising because anyone can jump in and buy the token. There is no pre-sale that offers early investors a better price for their tokens. For that reason, investors enjoy the IDO model.

Turing Complete – What does it have to do with smart contracts?

A Turing Complete machine can run any program and solve any computable problem given infinite resources and time. But why does this matter for blockchain technology?

In the early blockchain days, Ethereum branded itself as Turing Complete, while Bitcoin's scripting language is not. This matters because applications run on many computers at once, and there's no way to take them down. If we create an application that gets stuck in an infinite loop, it could consume all resources and make the whole Ethereum network useless. With a Turing Complete language, there is no general way to know in advance whether a program will finish, so Ethereum charges gas for every step a contract executes and halts the contract when its gas runs out. Keeping execution bounded in this way is vital for the security of the Ethereum Virtual Machine.
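One way to keep a runaway program from consuming all resources is to meter its execution, which is the idea behind gas on Ethereum. The sketch below is a toy model with hypothetical names (`run_with_gas`, `ExecResult`): it charges one unit of gas per step, whereas the EVM prices each operation differently.

```rust
#[derive(Debug, PartialEq)]
enum ExecResult {
    Finished { steps: u64 },
    OutOfGas,
}

/// Runs `step` repeatedly until it reports completion or the gas is used up.
/// `step` receives the current step number and returns `true` when the program is done.
fn run_with_gas<F: FnMut(u64) -> bool>(mut step: F, gas_limit: u64) -> ExecResult {
    let mut used = 0;
    loop {
        if used == gas_limit {
            // The budget is exhausted: stop instead of running forever.
            return ExecResult::OutOfGas;
        }
        used += 1; // charge one unit of gas for this step
        if step(used) {
            return ExecResult::Finished { steps: used };
        }
    }
}

fn main() {
    // A program that finishes after five steps.
    println!("{:?}", run_with_gas(|n| n == 5, 10));
    // An infinite loop is cut off once the gas limit is reached.
    println!("{:?}", run_with_gas(|_| false, 10));
}
```

The caller does not need to know beforehand whether the program terminates, because the gas limit bounds the work in either case.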

Blockchain developers can use the Solidity programming language to develop Turing Complete smart contracts compatible with the Ethereum blockchain.

From the article of Michiel Mulders

Top Blockchain Trends in 2022


Last year was very fruitful for the crypto community and for cryptocurrency as a whole. Many things changed in the crypto world, and many new users started to use cryptocurrency. It also gave very good returns to cryptocurrency investors.

But that was last year. Now the question is what lies ahead in the coming year.

As we all know, cryptocurrency and the technology behind it, blockchain, build trust and security for users in the online world. Blockchain is not only used for transactions but also to solve many other problems around the world. It has no borders, and it democratizes access in an efficient and secure manner.

Nowadays, blockchains are used in many areas besides cryptocurrency, such as smart contracts, logistics, supply chain origin tracking, security, and protection against identity theft. We can say that blockchain can be used anywhere there is a need for security and integrity and the database is accessed by multiple people. According to techJury, worldwide spending on blockchain solutions will reach $11.7 billion during 2022. Here I am going to discuss some of the trends that may play out in 2022 and change the lives of many.

1. More countries may adopt Bitcoin and national cryptocurrencies:

In 2021, El Salvador became the first country to adopt bitcoin as legal tender, which means it can be used across the country to buy goods and to pay salaries.

Many experts in the bitcoin world say that during 2022 a number of countries will adopt bitcoin and related cryptocurrencies as legal tender.

2. IoT integration with Blockchain:

As we all know, blockchain ledgers are automated, encrypted, and immutable. These properties are what IoT needs for its security and scalability. Blockchain could even be used for machine-to-machine transactions, enabling micropayments to be made via cryptocurrencies when one machine or network needs to procure services from another.

So in 2022, there might be pilot projects for IoT integration with blockchain.

3. Only 0.71% of the world's population uses blockchain technology:

According to Edureka, blockchain adoption statistics show that only 0.71% of the human population, or somewhere around 65 million people, is currently using blockchain technology. According to even the most conservative estimates, this number is expected to quadruple in 5 years, and in 10 years, 80% of the population will be involved with blockchain technology in some form. That means millions of people will be added during 2022.

4. NFTs are expanding beyond online art:

We have all heard about Non-Fungible Tokens (NFTs) and their growth during 2021. The prices of different NFTs skyrocketed, and NFTs of all kinds of things came into existence. Many artists, actors from different industries, and musicians jumped into the NFT world. Along with that, the metaverse plans of Facebook, Microsoft, and Nvidia created another level of hype in the market. So there will be plenty of opportunity during 2022 for innovative NFT use cases.

Many more things will happen during 2022 in the blockchain and cryptocurrency world. Blockchain can be used in vaccine manufacturing and tracking, supply chain and logistics management, and much more.

What is Rust Programming Language


What exactly is Rust, and why is it so popular nowadays? You may have come across this question if you're new to the world of computing. While Python and Java are still the most popular programming languages, Rust is quickly gaining traction. In this article, you will learn why Rust is so important, and finally how and where to start learning it.

About Rust

Rust is a popular programming language that was developed by Graydon Hoare at Mozilla Research with support from the community. It's a statically typed, multi-paradigm, general-purpose programming language that was created to deliver both high performance and safety. Its syntax is comparable to C++, but it has no garbage collection.

It's important to remember that Rust provides zero-cost abstractions, generics, and functional features, which eliminate many of the problems that low-level language programmers experience. As a result, Rust is used by a wide range of companies, including Dropbox, Figma, NPM, Mozilla, Coursera, Atlassian, and many others. Additionally, Microsoft's use of Rust for dependable and safety-critical development tools has bolstered the language's reputation.

Why is Rust so popular?

1. Rust solves Memory Management Issues.

Systems programming frequently requires low-level memory management, and with C's manual memory management, this task can be a real pain.

Rust has an amazing capacity to deliver convenience in the smallest aspects. It has direct access to hardware and memory because it doesn't need a garbage collector running in the background.

This means that writing low-level code in Rust feels like programming a microcontroller. You have complete control over your code without compromising memory safety.

2. Rust is excellent for embedded programming because of its low overhead.

Limited resources are common in embedded systems, which are commonly found in machines and home appliances. This is why low-overhead programming languages like Rust are necessary for embedded systems.

Rust is resource-efficient, which makes it a good fit for embedded devices. It also allows programmers to spot faults early on, preventing device failures.

The ability of Rust to provide zero-cost abstractions is the cherry on top. Rust is adaptable enough to accommodate any code abstraction you choose to use. You can use loops, closures, or whatever flavour of code you choose that day, and they'll generally compile to equivalent machine code without affecting your work's performance.
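As a small illustration of zero-cost abstractions, the two functions below compute the same sum of squares, once with an explicit index loop and once with an iterator chain and a closure. The function names are made up for this example. With optimizations enabled, the compiler typically generates comparable machine code for both, although the exact output depends on the compiler version and target.

```rust
/// Sum of squares written as an explicit index loop.
fn sum_of_squares_loop(values: &[u64]) -> u64 {
    let mut total = 0;
    for i in 0..values.len() {
        total += values[i] * values[i];
    }
    total
}

/// The same computation written with an iterator and a closure.
fn sum_of_squares_iter(values: &[u64]) -> u64 {
    values.iter().map(|v| v * v).sum()
}

fn main() {
    let data = [1, 2, 3, 4];
    println!("loop: {}", sum_of_squares_loop(&data));
    println!("iter: {}", sum_of_squares_iter(&data));
}
```

The higher-level version is shorter and avoids manual indexing, and you do not pay for that convenience at runtime.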

3. Rust Makes It Easier to Create Powerful Web Applications

When it comes to choosing the right technology stack for web app development, the programming language is critical. There are a number of strong reasons to use Rust programming in your web app architecture.

If you're used to creating web applications in high-level languages like Java or Python, you'll love working with Rust. The compiler catches many classes of errors, such as type errors and memory-safety errors, before the code ever runs.

Anyone who knows C will find Rust to be a breeze to pick up. Furthermore, you don’t have to spend years learning the ropes before you can start dabbling with Rust.

Some of the key advantages of utilizing Rust for web development are as follows:

Rust can be compiled to WebAssembly, which makes achieving near-native web performance much easier.

WebAssembly is a compilation target for many languages, Rust included, allowing for portable code that can be executed online.

4. Rust’s static typing makes it simple to maintain.

Rust is a statically typed language: all types are known at compile time. Rust is also strongly typed, which makes it harder to write incorrect programs.

Successful programming relies on the ability to manage complexity. The complexity of the code increases as it expands. By allowing users to keep track of what’s going on within the code, statically typed languages provide a high level of simplification.

Rust also encourages long-term maintainability: thanks to type inference, you don't have to repeat the type of a variable many times.
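The short sketch below shows static typing without repeated annotations. Only the function signature of the made-up `word_lengths` function states types, and the compiler infers the type of every local variable from it.

```rust
/// Returns the length of each whitespace-separated word in `text`.
fn word_lengths(text: &str) -> Vec<usize> {
    // No annotations on the locals: their types are inferred at compile time.
    let words = text.split_whitespace();
    let lengths = words.map(|word| word.len());
    // The target collection type comes from the declared return type.
    lengths.collect()
}

fn main() {
    let lengths = word_lengths("rust is fast");
    println!("{:?}", lengths);
}
```

Every variable still has a single, fixed type, so passing `lengths` to a function that expects a string would be rejected at compile time.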

5. Rust offers high performance.

Rust’s performance is comparable to C++, and it easily outperforms languages like Python.

Rust's speed is due in part to the lack of garbage collection. Unlike many other languages, Rust performs most of its safety checks at compile time rather than at runtime, so the compiler catches much incorrect code right away. This stops erroneous code from spreading throughout the system and wreaking havoc.

Furthermore, as previously said, Rust is lightning fast on embedded platforms as well.

6. Development and Support for Multiple Platforms

Rust allows you to program both the front-end and back-end of an application. The existence of Rust web frameworks such as Rocket, Nickel, and Actix simplifies Rust development.

To start developing with Rust, install Rustup, a toolchain installer and version management tool, and follow its instructions. You don't have to format the code by hand either: Rustfmt automates the formatting of code using the standard formatting styles.

7. Ownership

Unlike many other languages, Rust uses an ownership mechanism to manage memory while the programme is running. It consists of a set of rules that the compiler verifies. In Rust, each value has a variable known as its owner, and at any given time there can be only one owner. When the owner goes out of scope, the value is dropped, which means that any heap memory allocated for it is freed because the value can no longer be used. Compared with some other languages, the ownership rules offer benefits such as memory safety and finer control over memory management.
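These rules can be observed in a small sketch. The `Buffer` type, the `consume` function, and the drop counter are made up for this illustration. The counter records when a value is actually dropped, which shows that moving a value transfers ownership without freeing it and that the value is freed once its final owner goes out of scope.

```rust
use std::cell::Cell;

/// A heap-backed value that counts how often it is dropped.
struct Buffer<'a> {
    data: Vec<u8>,
    drops: &'a Cell<u32>,
}

impl Drop for Buffer<'_> {
    fn drop(&mut self) {
        // Runs exactly once, when the owner goes out of scope.
        self.drops.set(self.drops.get() + 1);
    }
}

/// Takes ownership of the buffer; it is dropped when this function returns.
fn consume(buffer: Buffer<'_>) -> usize {
    buffer.data.len()
}

/// Returns (length, drops after the move, drops after `consume`).
fn demo() -> (usize, u32, u32) {
    let drops = Cell::new(0);
    let first = Buffer { data: vec![1, 2, 3], drops: &drops };
    // Ownership moves to `second`; using `first` after this line would not compile.
    let second = first;
    let after_move = drops.get();
    // Ownership moves into `consume`, which drops the buffer at its end.
    let length = consume(second);
    (length, after_move, drops.get())
}

fn main() {
    println!("{:?}", demo());
}
```

No manual free call and no garbage collector are involved: the compiler inserts the cleanup at the point where the last owner's scope ends.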